The speed of human thought, and its implications for brain-computer interfaces

Life in the slow lane

Matt Angle

In "The Unbearable Slowness of Being…", Zheng and Meister discuss an unnerving paradox of human behavior. Though our sensory systems ingest gigabits per second, our perception and behavior seem to bottleneck consistently at less than 50 bits per second (bps) across a diverse range of cognitive tasks. Human psychophysics experiments estimate that we extract information from our environment at a rate of tens of bits per second. Behavior experiments similarly estimate that our movements are selected from a repertoire of approximately 2^10 possible actions per second, or about 10 bps. One of the most complex things we do is speak, and human speech has a bit rate of approximately 40 bps when transcribed. These numbers are staggeringly low compared with the data rates we associate with modern networking. In a world where 1 Mbps internet feels stifling and frustrating, how is it possible that we live our lives at only 10-50 bps?

It isn’t a coincidence that Zheng and Meister chose to title their essay after a piece of existentialist literature. Their conclusions are unsettling. Culture has long cast the body as limiting the mind, but Zheng and Meister's analysis suggests the opposite may be true. If we accept 10-50 bits per second as the information transfer rate tied to human behavior, then our minds occupy a very limited decision space relative to the capabilities of our bodies. Our sensory systems already ingest information at gigabit rates—many millions of times faster than our supposed ability to respond to stimuli—so increasing sensory input is unlikely to raise our cognitive throughput. Even considering just 14 major joint groups (shoulders, elbows, wrists, hips, knees, ankles, spine, neck) with only 10 possible positions each, sampled at a modest 10 Hz, describing basic human posture requires ~465 bits per second, an order of magnitude more data than our brains have been shown to process. Now consider that many joints can be controlled at angular resolution of < 1 degree, and the entire body has 200+ degrees of freedom (though biomechanical constraints prevent many theoretical combinations). Does this seem surprising? Try writing two sentences with different hands while speaking. Your body can do it. The extreme limitations of our minds relative to our bodies seem to upend the brain-computer-interface-as-transcendence narratives that assume humans are I/O-limited. In Zheng and Meister’s world, it is our bodies that are being limited by our feeble minds. The best candidate for replacement may be—our thinking.
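The posture estimate above can be reproduced in a few lines. This is a minimal sketch that assumes each joint group is independent and that its positions are equally likely, so each sample of one joint carries log2(10) bits. The function name `posture_bit_rate` is made up for illustration.

```python
import math

def posture_bit_rate(joints: int, positions_per_joint: int, sample_rate_hz: float) -> float:
    """Bits per second needed to describe posture, assuming independent
    joints whose positions are all equally likely."""
    bits_per_sample = joints * math.log2(positions_per_joint)
    return bits_per_sample * sample_rate_hz

# 14 joint groups, 10 positions each, sampled at 10 Hz (figures from the text)
rate = posture_bit_rate(joints=14, positions_per_joint=10, sample_rate_hz=10)
print(f"{rate:.0f} bits per second")  # 465 bits per second
```

Relaxing either assumption lowers the figure, since correlated joints and biomechanically impossible poses carry less information. That is the same caveat the text raises about the body's 200+ degrees of freedom.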

Super intelligent aliens could read 27,000 minds on a Gameboy

If we accept that the information transfer rate of human behavior is < 100 bps, interesting implications emerge for Brain-Computer Interface (BCI) technology. For instance, if you had a whole-brain interface with complete access to your thoughts, the BCI could decode anything you want to do and transmit that information over a Bell Telephone 100 bps modem from 1959 without losing any information. Getting a bit weirder, the 1989 Gameboy had 23,040 pixels and 2-bit shading, which means that in a single frame, it could encode and display 46,080 bits of data. The Gameboy has a refresh rate of ~60 frames per second, which means that it has the capability to visualize information at 2,764,800 bps. In other words, at 100 bps per person, super intelligent aliens could monitor the thoughts of 27,648 people by plugging their BCIs into a Gameboy and watching the screen. (Alas, we are not able to offer alien monitoring capabilities due to an exclusivity agreement between the aliens and another major BCI company.)
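For readers who want to check the Gameboy arithmetic, here is a short sketch that works from the pixel count, bit depth, and refresh rate quoted above. It assumes the 100 bps ceiling per person and treats the refresh rate as exactly 60 frames per second. The constant names are illustrative.

```python
PIXELS = 23_040          # pixel count quoted in the text
BITS_PER_PIXEL = 2       # 2-bit shading
FRAMES_PER_SECOND = 60   # approximate refresh rate, taken as exact here
BEHAVIOR_BPS = 100       # assumed ceiling on one person's behavioral throughput

bits_per_frame = PIXELS * BITS_PER_PIXEL
display_bps = bits_per_frame * FRAMES_PER_SECOND
minds = display_bps // BEHAVIOR_BPS

print(bits_per_frame)  # 46080
print(display_bps)     # 2764800
print(minds)           # 27648
```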

At this point, I should clarify something about data rates: even if human behavior is low-data rate, it doesn't follow that you can read activity out directly with a low-data rate BCI. In order to extract the salient features of brain activity, it must still be acquired at a reasonably high resolution. Think of a police speed trap along the highway: law enforcement uses cameras to capture high resolution images of license plates, but once your license plate is read, only 8 bits per character are needed to transmit your license plate number to the billing department. Just as you couldn't capture license plate numbers with an 8-pixel camera, you can't assume that because humans decide things at 10-ish bits per second you can buy an EEG headband from Amazon and begin mind-uploading.
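To put rough numbers on the speed trap analogy, the sketch below compares the data acquired with the data transmitted. Only the 8 bits per character comes from the text. The camera resolution, color depth, and plate length are hypothetical values chosen for illustration.

```python
# Hypothetical figures, except BITS_PER_CHARACTER, which is from the text.
IMAGE_WIDTH = 1920        # assumed camera resolution in pixels
IMAGE_HEIGHT = 1080
BITS_PER_PIXEL = 24       # assumed 8-bit RGB
PLATE_CHARACTERS = 7      # assumed plate length
BITS_PER_CHARACTER = 8    # from the text

acquired_bits = IMAGE_WIDTH * IMAGE_HEIGHT * BITS_PER_PIXEL
transmitted_bits = PLATE_CHARACTERS * BITS_PER_CHARACTER
ratio = acquired_bits / transmitted_bits

print(f"acquired:    {acquired_bits:,} bits per image")
print(f"transmitted: {transmitted_bits} bits per plate")
print(f"ratio:       roughly {ratio:,.0f} to 1")
```

Under these assumptions the acquisition side carries several hundred thousand times more data than the message that is eventually sent. That gap is why a low behavioral bit rate says little about the resolution a recording device needs.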

Outer brain – inner brain

What are humans doing with their brains if they take in so much data and in the end do so little? Comparing our own brains to those of other animals that do not write poetry or solve math proofs, we can infer that much of our brain is needed for taking in information from the noisy environment and controlling our complex bodies. This is what Zheng and Meister refer to as the "outer brain," contrasting it with the "inner brain" that integrates this information, attends to the task-salient features, and ultimately makes decisions. In effect, our minds are shaped like hourglasses, with wide funnels to take in information, a compact inner representation of the world, and a fan-out to lots of independently controllable muscle units. Deep learning enthusiasts might analogize that our "inner brains" are a bit like the middle layer of an autoencoder.

While the distinction between inner brain and outer brain helps frame how the brain processes information from a data perspective, it creates a false sense of separation that isn't cleanly defined by anatomy. Consciousness does not reside in a single brain area—it is likely an emergent property of the entire distributed system. The sensory inputs that feed our decision making are not separate from our conscious experience; they are most likely part of it. The act of doing things is not simply downstream execution from our conscious minds. Complex action involves continuous feedback loops between sensory and motor systems, and this high-dimensional dynamic process is itself part of our rich conscious experience.

This has important implications when we start thinking about interacting with the brain through BCI. Since there isn't a single inner brain area to plug into, we must find ways to interface with the brain's existing structure, and we must consider not only the raw amount of data necessary to complete a task, but the richness of sensory experiences that may feed into our more distilled representations, as well as how completing that task will be subjectively experienced.

Do we really need high-data rate BCIs if our brains are so slow?

Zheng and Meister are skeptical about the direction of the BCI field. Given that our inner brains can’t handle more than 10ish bits per second, why are BCI companies working to establish high data rate interfaces with the sensory and motor systems? Are they overengineering? They note that efforts to create a high-resolution visual interface have stumbled and suggest:

“...we know that humans never extract more than about 10 bits/s from the visual scene. So one could instead convey to the user only the important results of visual processing, such as the identity and location of objects and people in the scene. This can be done comfortably using natural language...”

Agreed, a language-based interface is probably a more useful assistive tool for people with vision loss than an implantable device that can stimulate 100 tiny flashes of light (phosphenes) in the visual field. Additionally, we should continue working on high channel count BCI systems that can one day restore the visual experience to people who have lost their sight. People do not experience words and vision the same way, and for people who want to see again, it offers little consolation to explain that much of what they remember about their kids’ faces was subjective inflation.

Zheng and Meister also have a take on motor prosthetics:

“...the vast majority of paralyzed people are able to hear and speak, and for those patients language offers a much simpler brain-machine interface. The subject can write by simply dictating text to the machine. And she can move her exoskeleton with a few high-level voice commands. If the robot thinks along by predicting the user’s most likely requests, this communication will require only a few words (“Siri: sip beer”), leaving most of the language channel open for other uses. The important principle for both sensory and motor BCIs is that one really needs to convey only a few bits per second to and from the brain, and those can generally be carried by interfaces that don’t require drilling holes in the user’s head.”

Yes, voice-activated home automation is a great way for people living with paralysis to regain autonomy, and building voice-activated exoskeletons would be a great idea. AND many people living with paralysis would like to have a more embodied experience of controlling robotic limbs and exoskeletons. Not everyone wants a beer poured into their mouth. Furthermore, the broad promise of BCI is not only to restore basic function to individuals with severe disabilities, but to open a range of new applications (medical and non-medical) based on seamless technology integration. While short-term solutions for assistive care are valuable pursuits, they stop short of the larger ambitions of BCI.

Using an AI copilot to assume majority control of motor tasks is an attractive intermediate solution while motor-BCI solutions are still immature, but these solutions come at the cost of agency—the fundamental human desire to be the driver in your own actions rather than a passenger.

No one who is sighted wants to permanently swap their vision for verbal narration, and no one who can move wants to permanently hand over control of their body to an AI copilot. We shouldn't expect people living with disabilities to accept those kinds of tradeoffs either. Pragmatic short-term solutions are valuable, but we should also continue building robust, high data rate platforms that restore not only essential functionality but naturalistic experience.

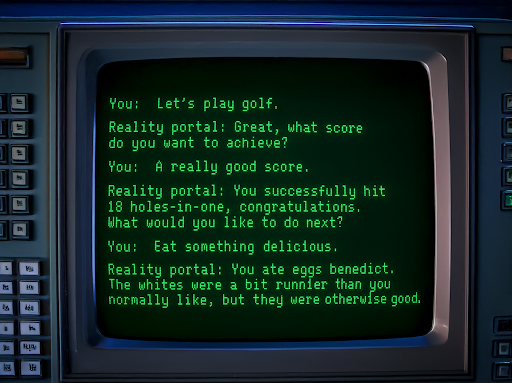

If we ignore user experience and anchor ourselves on purely task-based metrics, then present day (intracortical) BCI data rates are already sufficient for us to abandon our bodies. We can connect to robot avatars and exchange all of the data we need through a chat-based interface at communication rates on par with average typing and texting speeds:

The experience could be marginally enhanced with an LLM-version of Virginia Woolf providing narration, but the fact remains that reading about the Grand Canyon is not an equivalent experience to seeing it, telling a robot to play golf for you is not as much fun as playing, and reading about delicious food is actually downright frustrating when you aren't tasting it.

Embodiment as bandwidth

In the final section of their paper, "Outer brain vs inner brain," the authors point out that the experiments through which we view the brain can already impose a bias upon how we interpret its function. Interrogating sensory systems allows for presentation of complex stimuli with tight control of stimulus timing, and as a result we can see that neurons in the auditory and visual systems have complex tuning curves and encode information with precise timing. Experiments in the motor system involve relatively simple behaviors and a fair amount of trial-to-trial variation, and not surprisingly, we find low-dimensional representations and less temporal precision across tasks. The authors rightly ask whether the ways that we currently interrogate the "inner brain" could also be influencing the ways we quantify decision-making in the context of the brain.

Consider this example: imagine a carnival game called CAT DOG BASKETBALL. The game has two basketball hoops and a big overhead TV screen. The player is standing ten feet from the hoops, and a ball return is feeding him basketballs. When the screen shows any picture of a CAT, the player shoots a basket into the left hoop. When the screen shows a DOG, the player shoots the ball into the right hoop.

This is a binary discrimination task. The information transfer rate within the "inner brain" is one bit per image presentation.
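The one-bit figure is the Shannon entropy of a two-way choice. The sketch below assumes that cats and dogs appear with equal probability and that the player always picks the correct hoop. The function names and the presentation rate of two images per second are illustrative and do not come from the essay.

```python
import math

def entropy_bits(probabilities):
    """Shannon entropy, in bits, of a discrete probability distribution."""
    return -sum(p * math.log2(p) for p in probabilities if p > 0)

def decision_rate_bps(probabilities, presentations_per_second):
    """Information rate of the decision stream, assuming error-free responses."""
    return entropy_bits(probabilities) * presentations_per_second

print(entropy_bits([0.5, 0.5]))            # 1.0 bit per image
print(decision_rate_bps([0.5, 0.5], 2.0))  # 2.0 bps at two images per second
print(round(entropy_bits([0.9, 0.1]), 3))  # 0.469 bits if cats appear 90% of the time
```

If the images are unevenly split or the player makes mistakes, the rate falls below one bit per presentation, so one bit is the upper bound for this game.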

The player's experience, however, is more complex, because the player's experience is a combination of inner and outer brain processes. He does not know a priori which dog or which cat picture might appear on the screen, and the animal might appear in a complex scene. He is switching focus between the screen and the basketball hoops, and as the image presentation rate increases, attention becomes important.

Even after his decision is made, he needs to make the basket, which involves a non-trivial motor control task that integrates visual information, proprioception, and touch. While the command is being executed the cerebellum is looking to make sure that actual movement of the body matches the intended movement. This sensory-motor feedback loop is not "inner brain" as defined by Zheng and Meister, but it is part of our "inner experience", and it is much higher bandwidth than 10 bps.

Viewed from the narrow information theory perspective of the "inner brain," this entire rich experience reduces to a one-bit command. A blind, paralyzed player with a camera and a robotic assistant could play the game functionally with a simple BCI. But the game itself—the challenge, the embodiment, the fun—would be entirely lost.

Reality portal: "It's a cat."

You: "Left."

Measuring human experience by the information transfer rate of the "inner brain" is as reductive as the paradigm you choose to frame it in. Hamlet famously grappled with his decision "to be or not to be," and objectively, he spent his entire soliloquy vacillating over a simple binary discrimination task.

Even if people only make decisions at a certain data rate, it does not mean that they would be happy having their sensory and motor systems reduced to that same data rate. While we do not simultaneously attend to 1 gigabit per second, having that much data available in primary sensory areas allows us to quickly extract what we need. While our day-to-day behavior may be relatively stereotyped, the ability to control the full degrees of freedom of our musculoskeletal system allows us to learn new skills and navigate new situations.

Implications for future BCIs

The extreme amount of data compression that occurs in the brain leading up to behavior has profound implications for BCI design. It suggests that somewhere between the "outermost" brain areas and "innermost" brain areas there may be high level representations that can be accessed to provide sensory inputs that "feel" rich and granular while requiring substantially less data than would otherwise be required to create the same perception in an upstream brain area. In other words, it may be possible to engineer compelling subjective experiences with less data by targeting the right processing levels. There may also be areas involved in motor planning that can interface with AI-enabled robotics in a way that leverages non-biological control strategies without feeling disembodied. If these research paths prove successful then—from a data and engineering standpoint—BCI may be closer than expected to enabling exciting new applications.

Even if we accept the proposition that the brain only processes tasks at 50 bps or less, this does not mean that higher data rate brain-computer interfaces are unnecessary. Performing tasks at a certain speed does not mean that our experience would be equivalent if our sensory and motor systems were throttled to that same speed. Glasses, contact lenses, stroke rehabilitation, hearing aids, high resolution TVs, and broadband internet all exist as products because human experience is not limited to “inner” brain activities. People do not like having their sensory or motor capabilities reduced, and we perceive limitations at data rates much higher than 100 bps.

The convergence of high-resolution neural interfaces, advanced signal processing, and AI-enabled control systems will ultimately result in fewer tradeoffs and richer experiences for patients with BCI-based therapies. Intracortical recording offers the most direct route to higher data rates. It already sets the benchmark for BCI application performance by a wide margin, and the underlying physics suggest this advantage will persist. At Paradromics, we are building hardware that turns proven science into new applications today, while enabling academic researchers to unlock future applications through new science. Our goal is not only to restore basic function, but to restore and enhance the full spectrum of human agency and experience that makes our slow lives worth savoring.

