AI is not the bottleneck in BCI

AI is not the bottleneck in BCI

AI is not the bottleneck in BCI

Rapid advances in AI make BCI hardware development even more important

Matt Angle

Building fully implantable, high data rate brain-computer interfaces (BCIs) is extraordinarily difficult. So difficult, in fact, that arguably only two companies are pursuing it seriously. Paradromics is one of them.

In our previous post, "The Speed of Human Thought," we made the case that high-resolution BCIs are still needed despite the fact that human cognition and behavior outputs at 10-50 bits per second (bps). We argued that human experience encompasses far more than just information transfer—it includes the richness of sensory processing that feeds into it and the agency of full embodied control.

This leads to a related question we frequently encounter, especially from investors evaluating different BCI approaches:

"If ChatGPT can write a novel from a few-word prompt, why can't AI transform weak EEG signals into high performance complex control signals? Why invest in brain surgery when software might solve everything?"

This question cuts to the heart of how we think about artificial intelligence and brain-computer interfaces. Can AI truly overcome data quality limitations? Here we explain why data quality remains critical in the age of AI, and how the bottleneck for BCI today is data, not algorithms. As the fields of AI and BCI converge, data quality becomes more—not less—valuable.

Garbage in, genius out?

There's an old saying in computer science: "garbage in, garbage out." No matter how sophisticated a program, its output is only as good as its input. Yet many advances in generative AI seem to defy this logic. A simple or vague prompt can generate stunning, complex output. Asking "Write me a story about a dog" produces a vivid narrative full of details never imagined by the prompter. It seems like AI doesn't really need much data.

Consumers of crime shows will be familiar with the "ENHANCE IMAGE" button that converts blurry security footage into crystal clear images of the suspect. In these shows, algorithms can read a person's nametag even when the suspect's torso was only two pixels wide in the original image. Is this now possible with advanced AI?

AI can do a lot with a little, but it cannot overcome fundamental physical and informational limits. When we ask an LLM to provide more information than was contained in the original prompt, we're prompting it to be creative or to hallucinate. So how do we know when AI is extracting deep hidden truths from the data versus simply fabricating plausible details? The answer lies in understanding what AI models actually do—and their fundamental limitations.

I’m dating a model

Understanding the limits in AI models starts with defining what a model even is. In AI, "model" has the same meaning as "I built a model of the solar system in third grade." It's a simplified representation of something real, used for informational or operational purposes. A language model, for instance, maps out relationships between words and predicts how they fit together.

A good model can reconstruct degraded inputs, provided enough information exists. For example, you can still understand the sentence, "never wipe your a** with poison ivy" even if some letters are missing—your brain fills in the blanks. A good model can also "undo" certain data transformations: smartphone cameras have optical distortions that can be undone digitally.

There are limits to what AI can do however. If you take a short exposure photo in a dark room, the picture will be grainy and blurry. AI can try to fill in the missing information, but fundamentally, it’s making a guess based upon patterns it’s learned from prior data. For example, if the missing information is the words on a sign, AI will be making an educated guess. It can make a good guess about the word “stop” on a stop sign, given the shape and location of the sign. However, if the sign provides real-time traffic updates, AI will be making up the details and you’ll probably make the wrong choice and be late getting to your destination.

Even models trained on vast datasets still need a minimum amount of input data to function. A director and production crew might be a "generative model" for movies, but they still need a script. No matter how advanced an AI model is, it still requires detailed and clear input data if you want detailed and specific output.

Something from nothing

When you give a vague prompt to a generative model like ChatGPT, it can generate a highly specific output, but the details come from the model itself. If you ask for a picture "of a dog," the AI draws from a probability distribution of what dogs typically look like and delivers something "dog-like." Repeating the prompt will yield different dogs. Only when you specify further, "a red Doberman with uncropped ears, wearing a string bikini and holding a machine gun," do you gain more control over the output.

In some cases, we don't care about these details. Stock images for presentations work fine without precise user control. But in other cases, details matter. You wouldn't want ChatGPT to fill out your tax return without first providing factually accurate inputs. Similarly, an AI image generator can "ENHANCE IMAGE" indefinitely, but any new details that weren't in the original image were hallucinated. If the suspect's face emerged from only a few pixels, then CSI Miami probably kicked in the wrong door.

Some situations call for more than accuracy—they require agency and authenticity. An AI could impersonate you and check in with your friends and family during important events in their lives, It could probably do a good job at even capturing your personal tone “Congratulations on your new baby, dude!” But in delegating your social life to AI, you'd miss out on genuine human connection. Rather than talking to you, your loved ones would be talking to a model.

Preserving agency is crucial to making BCIs feel like seamless extensions of ourselves rather than external disembodied agents. That requires a reasonable degree of information throughput. If a BCI system only reliably detects close hand or kick leg, but is being asked to provide text outputs, it will either be tediously slow or it will fill in the vast majority of the text from its AI imagination.

AI models interpret the world through the lens of their training, and when input data is insufficient, they don't reveal hidden truth—they fabricate plausible fiction. This brings us to a key question: when does performance hinge on the model itself, and when is it fundamentally limited by the data it receives?

Training data vs. input data

When we discuss information-limited systems in this blog, we’re referring to input data during inference—what the model needs to generate a useful response. This data quality requirement is independent of the data used for model training. In fact, when we discuss information-limited systems we can assume for arguments sake that the model is trained perfectly from an infinite amount of training data. AI models still require data with sufficient quality to be input at inference time. Improvements in AI technology can make models train faster, but they cannot extract information that was never recorded.

Meta’s EMG wristband controller further illustrates this distinction. Rather than reading the brain directly, the sensor reads electrical activity from muscles in the forearm. Years ago, it was shown that muscle activity based recordings from this device, when interpreted by a model carefully tuned for an individual user, could enable gesture-based computer control. In order to enable “out of the box” use, with no user specific tuning, Meta had to train a foundation model using data from thousands of users. After training, the model allowed for the same level of performance out of the box that previously would have required extensive training. Critically, however, the device still DID the same thing. Even after massive training, the device was ultimately limited by the information available to it from muscle recording in the forearm. For instance, it still wouldn’t work for an individual with paralysis (no EMG signal), and it wouldn’t allow for speech decoding. Those goals aren’t model-limited, they are information-limited by the device itself.

For clarity when discussing data limitations at the time of inference, we will refer to systems as information-limited if they lack the necessary information transfer rate to enable a task, even assuming a perfectly trained decoding model.

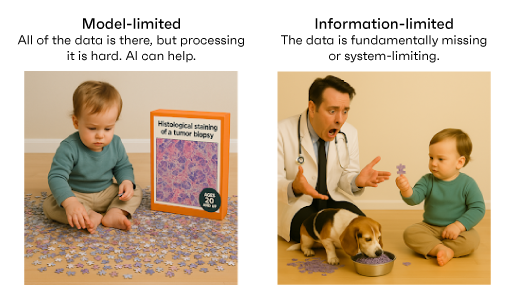

Model-limited vs. information-limited: What’s the difference?

To understand AI's impact on BCI, we must distinguish between model-limited and information-limited systems.

Model-limited systems are provided adequate input information to perform a task, but the structure of the AI algorithm, i.e., the model, that interprets the incoming sensor data limits final system performance. One clear sign that a system is model-limited rather than information-limited is if another system with access to the same input data can solve a task better. Optical character recognition and facial recognition, for example, were model-limited for years. Humans with access to high quality digital images are able to perform character recognition and facial recognition with high accuracy. Computer vision models had to be developed to perform those same tasks, and they improved over time—not as a result of better images—but as a result of better modeling and training. One of the big debates in self-driving cars centers around whether sensors other than cameras are necessary for full self-driving. Elon believes that cameras should be enough, because humans can drive cars using vision alone. In essence, he is asserting that Teslas are currently model-limited rather than information-limited.

Information-limited systems struggle because the necessary information isn't there. You can't listen to the radio with a banana. You can't use a normal light microscope to look at a virus without first modifying it in some way, and you can't pick out a single voice in a crowded stadium if you are across the street at a gas station. Even with the best AI tools, if the signal is too weak or too noisy, the system won't work.

A real-world example: If an image is slightly blurred using a known algorithm (or optics with known distortions), an inverse function might recover the original image. But if the blurring is too severe, restoring the image becomes impossible because it requires sensor accuracy that is not physically acheivable—it's now an information-limited problem (See: Ill-conditioned problems).

Theory and first principles can be very helpful in determining if a system is information-limited. For instance, if the system acts as a noisy information channel (i.e. a BCI transiting brain data to a computer) then it is possible to calculate information theoretic numbers such as information transfer rate and total channel capacity (see our Benchmarking scientific preprint for details). If the achievable and theoretical information limit of the system is low relative to the task you are trying to accomplish, then AI models will only be able to do so much. Using smoke signals to transmit high resolution JPEGs is possible, but at < 1 bit per second, the system performance will be information-limited and all of the downstream compute in the world won’t change that.

BCI: AI’s role depends on data quality

BCI performance depends on both coverage (which brain areas we record from) and density (how much information we extract from those areas). There is a direct analogy to imaging: you must take pictures of the things you want to learn about and you must also have sufficient information to learn about them. Just like imaging systems, not all BCIs are created equal, and some are “blurrier” than others.

Coverage

Different brain regions control different functions. Extracting useful information requires targeting the right area (e.g., the motor cortex for movement, the visual cortex for sight). Multi-modal BCIs, which interact with multiple regions, may need to interact with more than one brain region. For instance, a robotic hand with touch sensation requires access to both motor and sensory areas to be effective.

Density

Because brain activity is highly localized, the density of recorded information is critical. You can’t necessarily get more information about a given function by covering more brain area. If you want to predict an election result in Idaho, it is better to poll people in Idaho rather than polling nationwide.

Increasing information density in BCI necessarily means increasing resolution, the ultimate resolution being single neuron recording. To record single neurons, intracortical electrodes are placed inside the brain, under the cortical surface and within 100 micrometers of the neurons that are to be recorded. Since neurons fire in unique patterns (low correlations), adding more electrodes proportionally increases bandwidth.

The most performant intracortical BCI, demonstrated at UC Davis, has been used for real-world communication sustaining faster communication (30-60 wpm) with very little delay, essentially open vocabularies (100,000+ words), and lower word error rates (<5%).

Surface or endovascular electrodes—such as those placed inside blood vessels—only detect local field potentials (LFPs), which aggregate signals from many neurons. Since these electrodes pick up signals from afar, the recordings from nearby electrodes tend to be highly correlated. These recordings are a very blurry measurement of neuronal activity. Adding more electrodes yields diminishing returns, limiting their usefulness for high-bandwidth applications.

Clinical trials using Stentrodes show data rates at <1 bit per second (bps), enabling only 3-4 words per minute through specialized user interfaces. At 1 bps, generating outputs resembling normal speech (150-200 words per minute) is impossible without AI predicting nearly every word—stripping the user of control, agency and accuracy. By necessity, this means that stentrodes will be used differently than other forms of communication BCI.

ECoG-based BCIs operate at marginally higher data rates and achieve higher communication speeds, but must balance tradeoffs between error rates, vocabulary size, and speed. The highest performance demonstration of an ECoG-based BCI, from researchers at UCSF, used a small vocabulary (~1,000 words), effectively forming a constrained phrasebook (e.g., communicating like a tourist who only had time to read a portion of “German Phrases for Dummies” on their way to Germany). The data were so limited, they also had to introduce multi-second data integration delays to achieve word error rates of 25% in a highly controlled task. This is similar to waiting for a foreign language translator to hear an entire sentence before providing you with a translation.

A useful analogy to understand the performance gaps between intracortical and ECoG devices is to think about intracortical electrodes and surface electrodes like microphones. Imagine placing one microphone in a football stadium’s stands (intracortical) and another in the parking lot (surface). The stand microphone captures individual voices, while the parking lot microphone hears only the crowd's roar. Adding more microphones in the stands reveals new conversations, but adding more in the parking lot just re-samples the same crowd noises. The same is true for neural recording of “neuron conversations.”

BCI systems today are information-limited, and surface and endovascular systems provide substantially less data quality than intracortical systems. While more information-limited BCIs can benefit from AI models, those models will not make their performance anywhere close to equivalent to intracortical systems. Instead, in the absence of data, their applications must lean more on AI’s generative capabilities and less upon the agency and intent of the BCI user.

A note on Paradromics technology: on our current benchmarking tests in sheep auditory cortex, we have achieved data transfer rates of 200+ bps. While it is very difficult to compare data rates across tasks, that rate is still highly significant in that it shows that our hardware limited data ceiling is well above any previously demonstrated BCI trial. From transcripts of human speech, across languages, linguists estimate that spoken language transmits information at ~40 bits per second. Accounting for some sampling inefficiencies and the incorporation of pitch, loudness, and prosody, we are approaching the threshold where BCIs can achieve natural speech speeds without major tradeoffs.

When AI steps in

When signals are weaker, noisier, or aggregated (as with surface electrodes), they lose the granularity needed for advanced applications like speech decoding or fine motor control. No matter how good your algorithms are, they can’t recover details that were never recorded. The only way AI can overcome this limitation is by making a best guess based on context.

Generative models excel at filling in missing details, much like how language models help with communication BCIs. For example, a text-based BCI might only allow a user to select a single word at a time, but an AI-powered system could predict entire sentences based on context—just as your phone’s autocorrect suggests words before you type them. However, while this speeds up communication, it reduces agency. Instead of crafting a message word by word, users may find themselves picking from AI-generated suggestions—similar to choosing a Hallmark card instead of writing a personal note.

The same principle applies to movement. A motor BCI might not capture every small muscle intention, but AI can generate smoother, more natural-seeming movements. Imagine a system that helps a paralyzed user grab a cup: the BCI might only detect the intention to “reach,” while AI fills in the gaps to generate the full arm motion. But just as picking a greeting card isn’t the same as composing your own words, initiating a movement isn’t the same as controlling the movement. The more AI takes over, the less the user will feel in control.

Doing more with more

The future of BCI is not about choosing between smarter AI or better hardware. It depends on pairing the richest neural data with the most advanced models. AI will be essential for decoding and interpreting brain activity, but it cannot replace high-quality input data.

We are still in the earliest stages of what will likely become an exponential expansion of BCI applications. In this phase, each improvement in neural interface technology opens the door to entirely new categories of use cases. Just as each generation of modem technology transformed what the internet could deliver by enabling streaming, video calls, and cloud computing, future increases in BCI "download rates" will reshape what is possible.

Better AI models have not reduced the need for high-bandwidth, low-latency internet. In fact, they have increased the demand for data. The same principle applies to BCI. Better models make richer data even more valuable.

The human species is always looking to expand its horizons, and BCI will be no exception. We are within a generation of turning our most powerful sensors and algorithms into treatment for our most stubborn neurological conditions. The same technology offers the alluring possibility of expanding our consciousness beyond the model and data limitations of our native biology. The movement in the field toward more data is inexorable, and AI will be an accelerating factor rather than a substitute.

For media inquiries, please contact: media@paradromics.com.

Read more by Matt Angle on Substack.

%20(1).svg)